Published on 10/31/2024

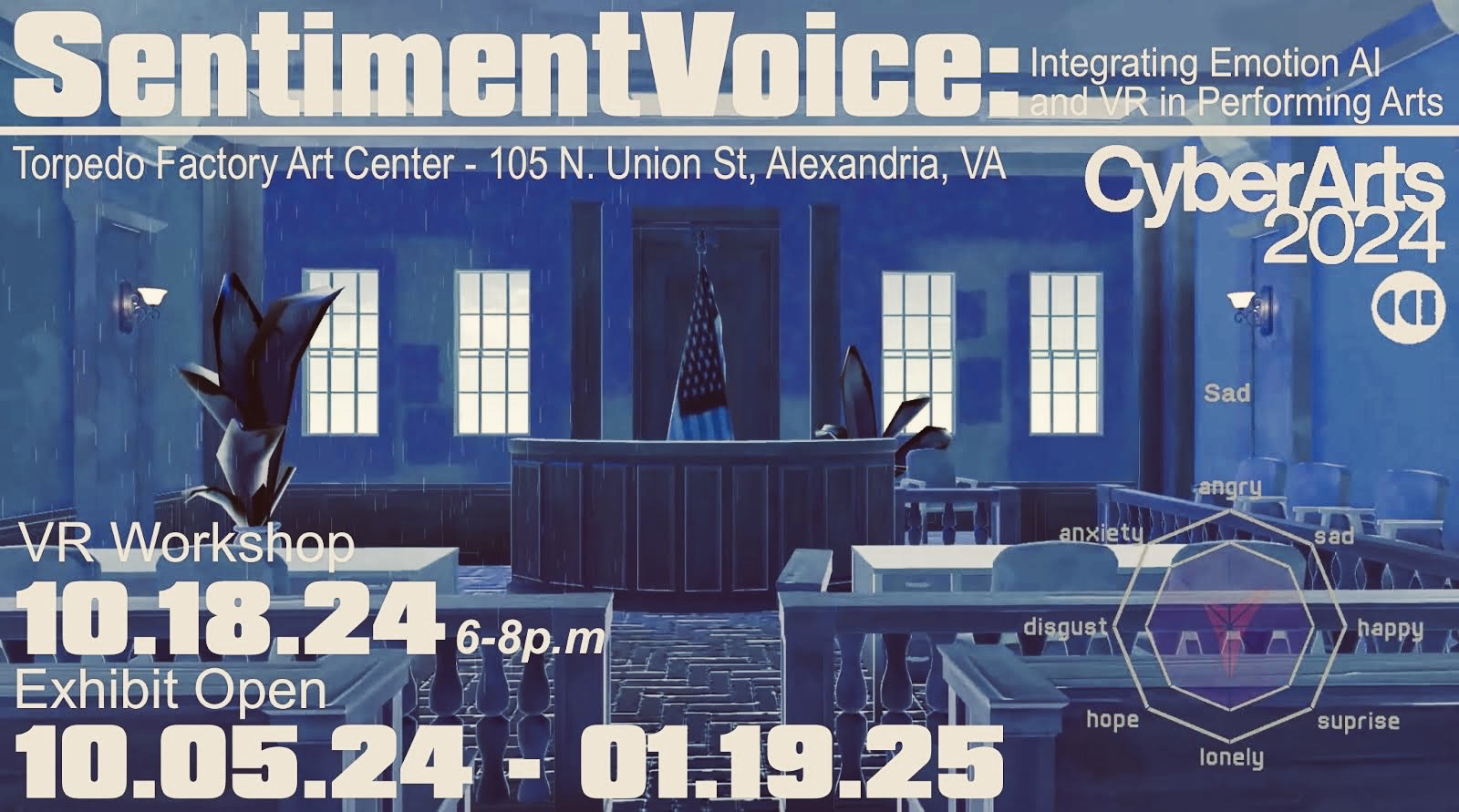

The SentimentVoice project, part of the 2024 Cyber Arts Exhibit at the Torpedo Factory Art Center in Alexandria, Virginia, is an innovative fusion of technology and art that sheds light on the often-overlooked vulnerabilities of emotion data in digital communications. This exhibit, running from October 5, 2024, to January 19, 2025, is a result of the Commonwealth Cyber Initiative (CCI), which encourages collaboration between cybersecurity researchers and the arts community across Virginia. The initiative, driven by a desire to explore cybersecurity issues creatively, brings together artists and academics from universities such as Virginia Tech, George Mason University, and Virginia Commonwealth University (VCU). SentimentVoice, one of the standout projects, uses cutting-edge Emotion AI and Virtual Reality (VR) to raise awareness about the risks of emotional surveillance in our hyperconnected world.

At its core, SentimentVoice seeks to investigate how emotion-tracking technologies—AI systems that analyze speech, facial expressions, and other data—can be used both for positive engagement, such as building empathy, and for more nefarious purposes, such as surveillance and manipulation. The project highlights a crucial and growing cybersecurity concern: how personal, emotional data in everyday digital interactions (chat, social media, and more) can be exploited by malicious actors.

Principal Investigators

SentimentVoice is spearheaded by Semi Ryu, Ph.D., a professor at VCU’s School of the Arts, Department of Kinetic Imaging, and Alberto Cano, Ph.D., an associate professor in VCU’s Department of Computer Science. This interdisciplinary team blends expertise in media arts, computer science, and AI technology to create a performance art project that merges artistic expression with advanced technical research.

Bringing Emotion AI into the Spotlight

The performance aspect of SentimentVoice is where art meets tech in a captivating, immersive experience. During live performances, actors engage in emotionally charged storytelling, using real-life narratives gathered from immigrant communities in Richmond, Virginia. The stories reflect moments of transition and hardship, such as navigating airports, schools, and immigration offices. One actor wears a VR headset, while the other does not, establishing a dynamic interplay between the real and virtual spaces, as well as between past memories and current realities.

“Our artist group, me and my students, set out to collect stories of immigrants in the Richmond community. We looked for the emotional memories attached to locations so that we could connect them to the AI that Dr. Cano and his team were developing. We found that a lot of emotionally charged stories were associated with the airports, hospitals, schools, emigration offices, and workplaces, from which both our beautiful VR environments and performance scripts came,” says Dr. Ryu.

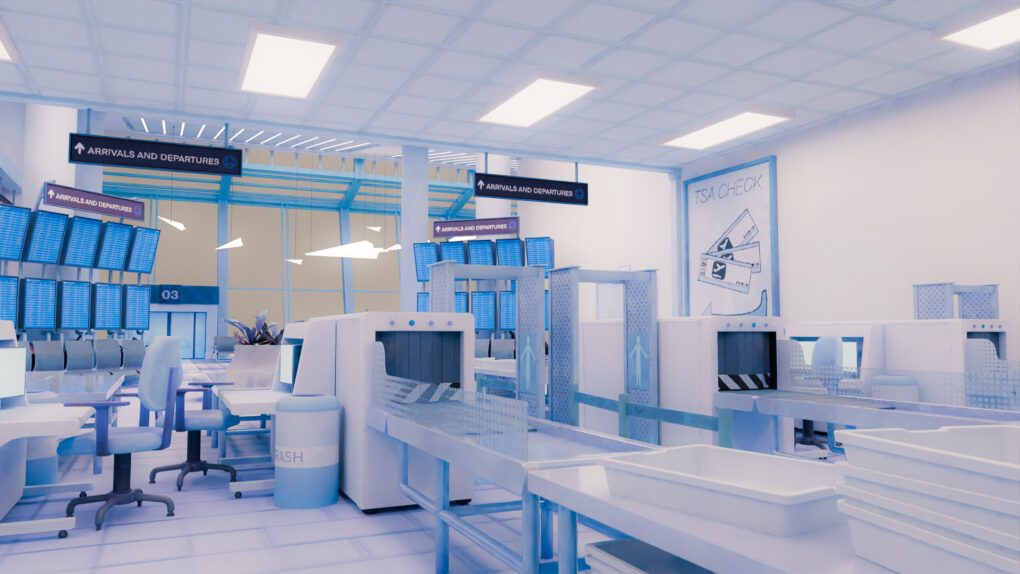

Screenshot from the VR experience in a TSA Check digital set. (Created by Matthew Labella)

As the actors speak, the emotion AI system tracks their speech, analyzing emotional content in real time. The detected emotions trigger corresponding changes in the VR environment, altering visuals, sounds, and even light patterns within the virtual space. By visualizing the emotion-tracking process, SentimentVoice demonstrates how AI can extract emotional data from personal speech, highlighting the often-invisible surveillance mechanisms embedded in today’s digital platforms.
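To make the idea of emotions driving the virtual space concrete, here is a minimal sketch of how a detected emotion label and its confidence might be turned into scene settings. Everything in it is hypothetical and chosen for illustration: the `SceneState` fields, the `PRESETS` table, the emotion labels, and the `scene_for` function are assumptions, not the project's actual implementation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class SceneState:
    """Hypothetical VR scene settings driven by a detected emotion."""
    light_rgb: tuple       # light color, each channel from 0.0 to 1.0
    ambient_sound: str     # name of an ambient sound loop
    intensity: float       # 0.0 (subtle) to 1.0 (strong)


# Illustrative emotion-to-scene presets; labels and values are assumptions.
PRESETS = {
    "fear": ((0.6, 0.1, 0.1), "low_drone"),
    "sadness": ((0.2, 0.3, 0.7), "slow_rain"),
    "joy": ((1.0, 0.9, 0.4), "bright_chimes"),
}
NEUTRAL = ((0.8, 0.8, 0.8), "room_tone")


def scene_for(emotion: str, confidence: float) -> SceneState:
    """Pick a preset for the emotion and scale the effect by confidence."""
    rgb, sound = PRESETS.get(emotion, NEUTRAL)
    # Clamp so a miscalibrated score cannot push the scene out of range.
    intensity = max(0.0, min(1.0, confidence))
    return SceneState(rgb, sound, intensity)
```

In a setup like this, an unrecognized label falls back to the neutral preset, so the scene stays stable when the classifier is unsure.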

More screenshots from the VR experience resembling various locations from the immigrants’ experiences. (Created by Matthew Labella)

Methodology and Technical Exploration

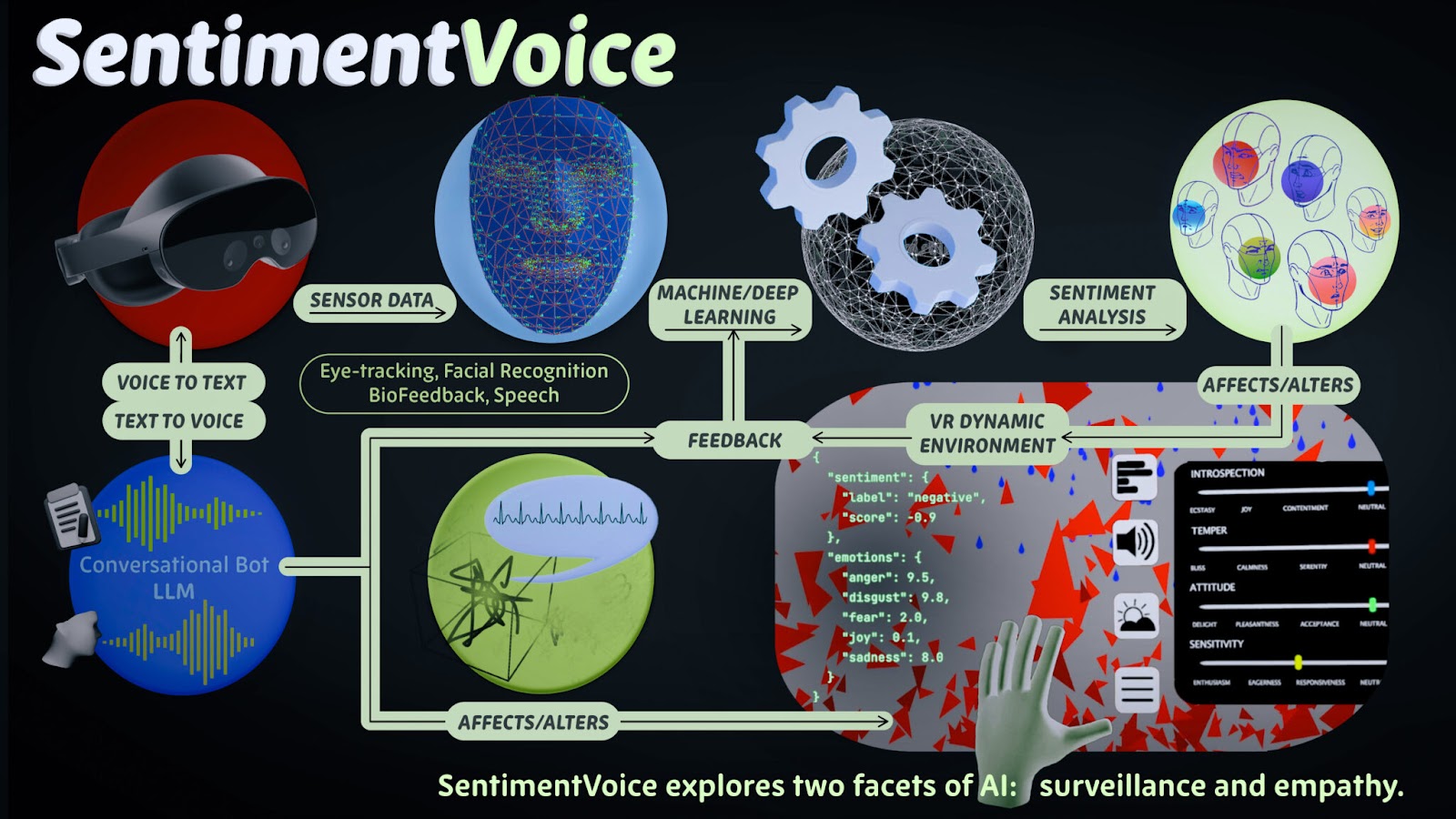

SentimentVoice is grounded in sophisticated technical exploration, using deep learning models to process and analyze emotional data from continuous speech. The system employs speech recognition, sentiment analysis, and voice texture analysis to detect emotional nuances in the performance. By experimenting with the robustness of these AI models against adversarial attacks—which could manipulate or deceive emotion-tracking systems—the researchers aim to better understand the security implications of emotion AI in real-world applications.
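The article does not say how the text-based and voice-based analyses are combined, or how robustness is measured. As a rough illustration only, the sketch below assumes a simple weighted average of two per-emotion score dictionaries (often called late fusion) and checks whether tampering with the voice scores changes the final label. The function names, the weighting, and the example scores are all hypothetical.

```python
def fuse(text_probs, voice_probs, text_weight=0.5):
    """Weighted average of two per-emotion score dicts, renormalized to sum to 1."""
    labels = set(text_probs) | set(voice_probs)
    mixed = {
        label: text_weight * text_probs.get(label, 0.0)
        + (1.0 - text_weight) * voice_probs.get(label, 0.0)
        for label in labels
    }
    total = sum(mixed.values())
    if total == 0:
        raise ValueError("both inputs are empty or all-zero")
    return {label: score / total for label, score in mixed.items()}


def top_label(probs):
    """Return the emotion with the highest fused score."""
    return max(probs, key=probs.get)


def label_flips(text_probs, voice_probs, perturbed_voice_probs, text_weight=0.5):
    """True if swapping in perturbed voice scores changes the fused label."""
    before = top_label(fuse(text_probs, voice_probs, text_weight))
    after = top_label(fuse(text_probs, perturbed_voice_probs, text_weight))
    return before != after


# Made-up scores for illustration: the words and the voice both lean toward joy.
text = {"joy": 0.6, "sadness": 0.4}
voice = {"joy": 0.7, "sadness": 0.3}
# A manipulated voice signal that pushes the voice model toward sadness.
tampered_voice = {"joy": 0.1, "sadness": 0.9}

flipped = label_flips(text, voice, tampered_voice)
```

With these made-up numbers, the tampered voice scores are enough to flip the fused label from joy to sadness, which is the kind of sensitivity a robustness study would try to quantify.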

The project’s methodology is twofold:

- Live Performance: SentimentVoice leverages emotion AI to actively respond to the performer’s speech during live storytelling, creating an interactive, evolving VR space. This real-time interaction allows the audience to see how speech is converted into emotional data and how that data can be used or misused.

- Public Participation: After the live performances, the installation becomes interactive, allowing attendees to engage with the AI by sharing their own stories, further exploring how emotion data is collected and interpreted.

Technical diagram outlining the technology that went into SentimentVoice. (Created by Rayna Hugo)

Artistic and Cybersecurity Implications

SentimentVoice taps into a broader conversation about the ethics and security risks of emotion-tracking technologies, which are becoming increasingly prevalent in both commercial and governmental applications. Technologies such as lie detectors, facial recognition software, and emotion AI are often used to monitor behavior in ways that disproportionately affect vulnerable communities, including immigrants and minorities.

“Often the sciences and arts develop divergently, so we have to seek out these opportunities for connection and intersection. And it is very difficult because of the variance in language and ways of thinking that we have, but at the end of the day the resulting combination of our perspectives yields invaluable results, as is the case here,” said Dr. Cano.

However, the project also explores how these same technologies can be subverted to foster empathy and understanding, offering a counter-narrative to their often oppressive use. By turning emotion AI into a tool for art and storytelling, SentimentVoice not only brings attention to the potential for misuse but also showcases the technology’s capacity for positive social impact.

Outcomes and Broader Impact

Through SentimentVoice, Dr. Ryu, Dr. Cano, and their teams hope to spark public dialogue about the ethical dimensions of AI and emotion-tracking systems. The project’s outcomes include:

- The development of an emotion-tracking AI system designed to analyze human speech and emotional content.

- A live performance series at the Cyber Arts Exhibit, where audiences can witness firsthand how AI interprets emotion in real time.

- An interactive VR installation that allows public participants to engage with the emotion AI, deepening their understanding of how their own emotional data could be used.

- Video documentation of the live performances, which will be presented at international conferences and exhibitions focused on AI, cybersecurity, and new media.

The researchers plan to disseminate their findings through academic and artistic channels, including conferences organized by ACM and IEEE, as well as SIGGRAPH XR. These presentations will further bridge the gap between technical and creative disciplines, fostering ongoing collaboration between cybersecurity experts and artists.

SentimentVoice, as part of the Cyber Arts Exhibit, illustrates how cybersecurity can intersect with art to create a thought-provoking exploration of modern technology’s impact on privacy and emotion. By highlighting the risks and opportunities presented by emotion AI, this project not only advances technical knowledge but also encourages audiences to reflect on the implications of living in a world where our emotions are as trackable as our clicks. Through immersive VR storytelling, SentimentVoice ultimately transforms emotion AI from a tool of surveillance into a medium for empathy and connection, challenging us to think more critically about the data we share—knowingly or unknowingly—every day.